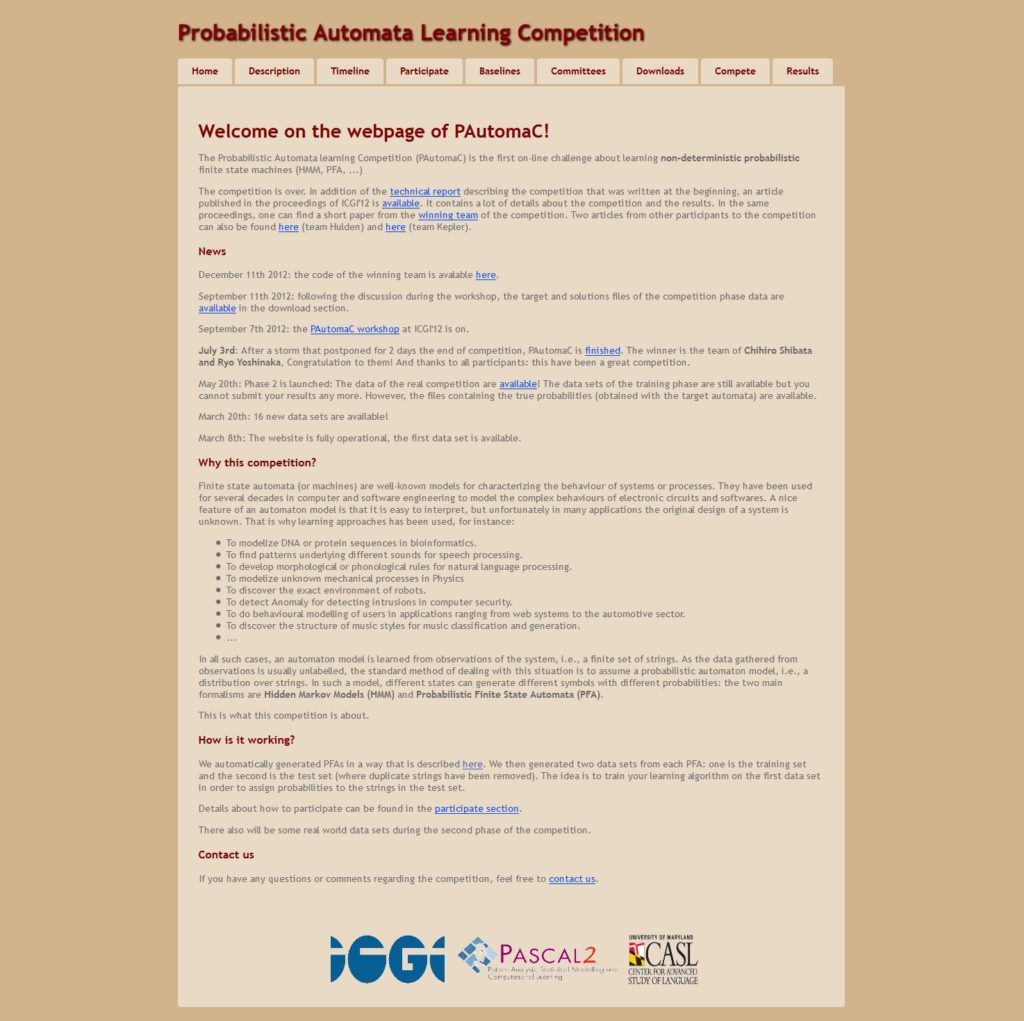

The Probabilistic Automata learning Competition (PAutomaC) is the first on-line challenge about learning non-deterministic probabilistic finite state machines (HMM, PFA, …)

The competition is over. In addition of the technical report describing the competition that was written at the beginning, an article published in the proceedings of ICGI’12 is available. It contains a lot of details about the competition and the results. In the same proceedings, one can find a short paper from the winning team of the competition. Two articles from other participants to the competition can also be found here (team Hulden) and here (team Kepler).

News

December 11th 2012: the code of the winning team is avalable here.

September 11th 2012: following the discussion during the workshop, the target and solutions files of the competition phase data are available in the download section.

September 7th 2012: the PAutomaC workshop at ICGI’12 is on.

July 3rd: After a storm that postponed for 2 days the end of competition, PAutomaC is finished. The winner is the team of Chihiro Shibata and Ryo Yoshinaka, Congratulation to them! And thanks to all participants: this have been a great competition.

May 20th: Phase 2 is launched: The data of the real competition are available! The data sets of the training phase are still available but you cannot submit your results any more. However, the files containing the true probabilities (obtained with the target automata) are available.

March 20th: 16 new data sets are available!

March 8th: The website is fully operational, the first data set is available.

Why this competition?

Finite state automata (or machines) are well-known models for characterizing the behaviour of systems or processes. They have been used for several decades in computer and software engineering to model the complex behaviours of electronic circuits and softwares. A nice feature of an automaton model is that it is easy to interpret, but unfortunately in many applications the original design of a system is unknown. That is why learning approaches has been used, for instance:

- To modelize DNA or protein sequences in bioinformatics.

- To find patterns underlying different sounds for speech processing.

- To develop morphological or phonological rules for natural language processing.

- To modelize unknown mechanical processes in Physics

- To discover the exact environment of robots.

- To detect Anomaly for detecting intrusions in computer security.

- To do behavioural modelling of users in applications ranging from web systems to the automotive sector.

- To discover the structure of music styles for music classification and generation.

- …

In all such cases, an automaton model is learned from observations of the system, i.e., a finite set of strings. As the data gathered from observations is usually unlabelled, the standard method of dealing with this situation is to assume a probabilistic automaton model, i.e., a distribution over strings. In such a model, different states can generate different symbols with different probabilities: the two main formalisms are Hidden Markov Models (HMM) and Probabilistic Finite State Automata (PFA).

This is what this competition is about.

How is it working?

We automatically generated PFAs in a way that is described here. We then generated two data sets from each PFA: one is the training set and the second is the test set (where duplicate strings have been removed). The idea is to train your learning algorithm on the first data set in order to assign probabilities to the strings in the test set.

Details about how to participate can be found in the participate section.

There also will be some real world data sets during the second phase of the competition.