Automatic text understanding has been an unsolved research problem for many years. This is partly due to the dynamic and diverging nature of human languages, which gives rise to many different varieties of natural language. These variations range from the individual level, through regional and social dialects, up to seemingly separate languages and language families.

However, in recent years there have been considerable achievements in data-driven approaches to computational linguistics that exploit the redundancy in the encoded information and in the structures used. These approaches are mostly not language-specific, and some can even exploit redundancies across languages.

This progress in cross-lingual technologies is largely due to the increased availability of multilingual data in the form of static repositories or streams of documents. In addition, parallel and comparable corpora such as Wikipedia are easily available and constantly updated. Finally, cross-lingual knowledge bases such as DBpedia can be used as an interlingua to connect structured information across languages. Together, these resources help scale traditionally monolingual tasks, such as information retrieval and intelligent information access, to multilingual and cross-lingual applications.

From the application side, there is a clear need for such cross-lingual technology and services. Systems available on the market typically focus on multilingual tasks, such as machine translation, and do not deal with cross-linguality. A good example is Google News, one of the most popular news aggregators, which collects news separately for each individual language. The ability to cross the border of a particular language would help many users take in the breadth of news reporting by combining information in their mother tongue with information from the rest of the world.

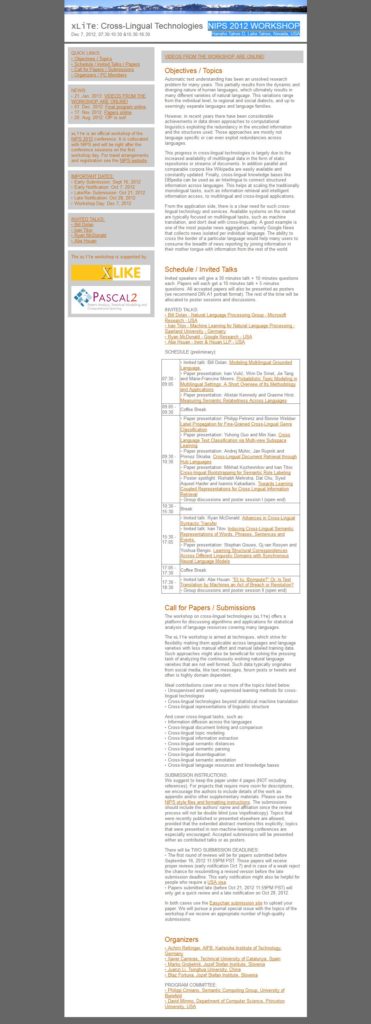

xLiTe: Cross-Lingual Technologies