Abstract

We propose monitoring the tomato pest Tuta absoluta with a data-driven computer vision technique that directs extension officers’ support services across sub-Saharan Africa through a real-time pest damage assessment and recommendation support system for small-scale tomato farmers.

Problem situation

Agriculture is a vital tool for sustainable development in Africa. A high-yielding crop such as tomato, with its high economic returns, can greatly increase smallholder farmers’ income when well managed. Tomato is socio-economically important, providing market opportunities as well as food and nutritional security for smallholder growers. However, production is constrained by the recent invasion of the tomato pest Tuta absoluta, which devastates tomato yields, causing losses of up to 100% and jeopardizing the livelihoods of millions of growers in sub-Saharan Africa [1]. This puts small-scale farmers at risk of losing income. Tuta absoluta has swept across Africa, leading to the declaration of a state of emergency [2][3] in some of the continent’s main tomato-producing areas. Furthermore, there is a lack of adequate capacity to detect the pest and implement management measures. A shift is needed from reactive to more proactive intervention, based on the internationally recognized three-stage approach of prevention, early detection and control. This work focuses on early detection, a novel approach among initiatives to strengthen phytosanitary capacity and systems, to help address the devastation caused by Tuta absoluta.

Objective

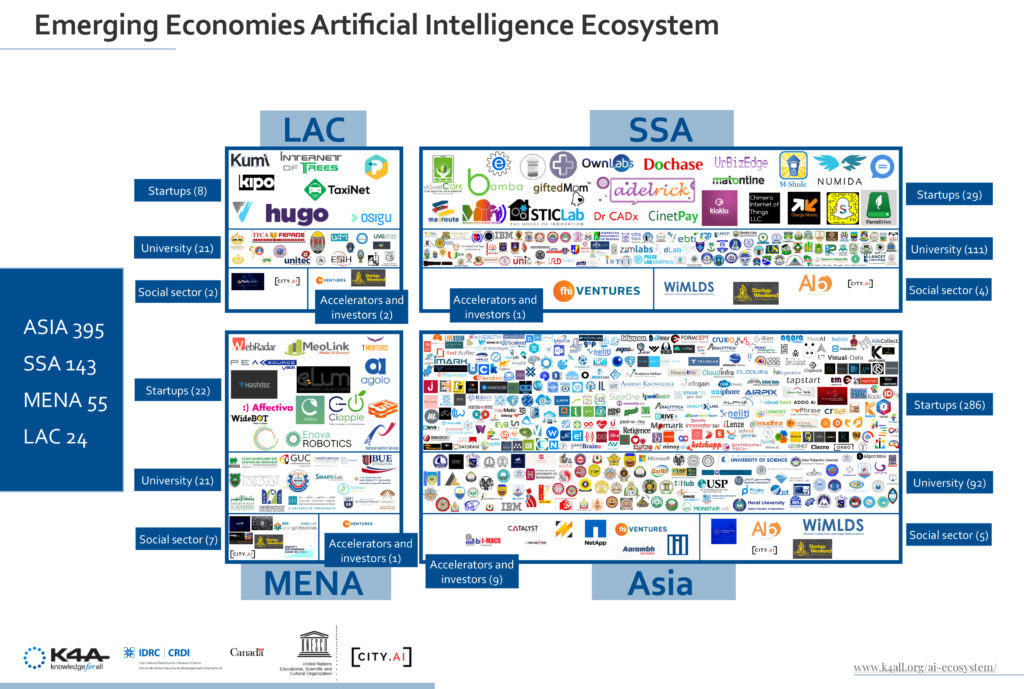

This work aims to transform Tuta absoluta pest monitoring by using a data-driven computer vision technique to direct extension officers’ support services [4] across sub-Saharan Africa through a real-time pest damage assessment and recommendation support system for small-scale tomato farmers. To the best of our knowledge, it will be the first alternative approach that uses computer vision to help alleviate the current alarming situation of the invasive tomato pest Tuta absoluta by providing solutions that support early management and control. We aim to increase the effectiveness of limited farm-level extension support by leveraging emerging technology [5] and by directing extension support to the affected areas (identified from damage status maps), using our models trained on quantified images of pest damage.

Justification

Pests and diseases are a major threat to smallholder farmers [6]. However, Tuta absoluta control still relies on slow, inefficient manual identification and, in a few cases, on the support of a limited number of agricultural extension officers [7]. Recent improvements in telecommunication infrastructure and information technology make it possible to deploy state-of-the-art computer vision [8] [9] [10]. Applying image recognition based on computer vision allows early identification and quantification of Tuta absoluta damage status, so that phytosanitary control measures can be targeted more precisely.

Preliminary works and Expected outcomes

Our hypothesis is that current emerging technology can be integrated into a decision platform for tomato pest management and can provide diagnostics in real time with minimal human capacity training. We also plan to extend the platform and integrate alternative support, such as the recent discovery of a promising pesticide by our team member, Ms. Never. We anticipate that advice from the limited extension service can be delivered to large numbers of smallholder farmers. We fully expect the proposed work to succeed. The first steps of this work have already been completed over the last 12 months through field work and in-house experiments, collecting data with cameras and drones in affected areas of Arusha and Morogoro, Tanzania. We have taken and labeled over 4,000 images of tomatoes, together with multispectral images (RGB, infra-red and red edge, which allow vegetation indices such as NDVI to be computed), and trained convolutional neural network models. The models can classify Tuta absoluta damage cases. This work was also selected as a winner at the Computer Vision for Global Challenges (CV4GC) workshop held at the Conference on Computer Vision and Pattern Recognition (CVPR). The multidisciplinary research team and its links to key players such as Sokoine University of Agriculture, NM-AIST and agricultural extension officers have helped in the initial work. A combination of different technical skills and backgrounds could be the best approach to tackling the apparent state of emergency caused by the Tuta absoluta invasion. Since we expect our work to have a major impact, we will test how Tuta absoluta pest damage map assessment could increase yield in tomato value chains in Tanzania and sub-Saharan Africa.
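As an illustration of the vegetation index mentioned above, the following minimal sketch computes NDVI per pixel from a near-infrared band and a red band using the standard definition NDVI = (NIR - Red) / (NIR + Red). It is not the project's pipeline: the function names `ndvi` and `mean_ndvi`, the `eps` guard and the 2x2 reflectance values are assumptions made only for this example.

```python
def ndvi(nir, red, eps=1e-9):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) for two equally sized 2-D bands.

    Pixels where NIR + Red is (near) zero are set to 0.0 to avoid division by zero;
    this fallback is an assumption of the sketch, not a standard.
    """
    if len(nir) != len(red) or any(len(n) != len(r) for n, r in zip(nir, red)):
        raise ValueError("bands must have the same shape")
    return [
        [(n - r) / (n + r) if (n + r) > eps else 0.0
         for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]


def mean_ndvi(nir, red):
    """Average NDVI over all pixels, a simple per-image summary."""
    values = [v for row in ndvi(nir, red) for v in row]
    return sum(values) / len(values)


# Hypothetical 2x2 reflectance values (not taken from the project's dataset).
nir_band = [[0.8, 0.6], [0.5, 0.0]]
red_band = [[0.2, 0.2], [0.5, 0.0]]

ndvi_map = ndvi(nir_band, red_band)
print(ndvi_map)
print(mean_ndvi(nir_band, red_band))
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so higher NDVI values generally indicate denser, healthier foliage. A per-image summary like this could serve as one input feature alongside the RGB images, although how the index is actually used in the models is not specified here.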

References

[1] Z. Never, A. N. Patrick, C. Musa, and M. Ernest, “Tomato Leafminer, Tuta absoluta (Meyrick 1917), an emerging agricultural pest in Sub-Saharan Africa: Current and prospective management strategies,” African J. Agric. Res., vol. 12, no. 6, pp. 389–396, 2017.

[2] “Nigeria’s Kaduna state declares ‘tomato emergency’.” [Online]. Available: https://www.bbc.com/news/world-africa-36369015 Accessed: 30 July 2018.

[3] “Invasive Africa: Tuta absoluta.” [Online]. Available: https://www.youtube.com/watch?v=_dubR2qoW8k Accessed: 24 September 2018.

[4] T. J. Maginga, T. Nordey, and M. Ally, “Extension System for Improving the Management of Vegetable Cropping Systems,” vol. 3, no. 4, 2018.

[5] S. R. Zahedi and S. M. Zahedi, “Role of information and communication technologies in modern agriculture,” International Journal of Agriculture and Crop Sciences, vol. 4, no. 23, pp. 1725–1728, 2012.

[6] V. Mutayoba, T. Mwalimu, and N. Memorial, “Assessing tomato farming and marketing among smallholders in high potential agricultural areas of Tanzania,” no. July, pp. 0–17, 2017.

[7] R. Y. A. Guimapi, S. A. Mohamed, G. O. Okeyo, F. T. Ndjomatchoua, S. Ekesi, and H. E. Z. Tonnang, “Modeling the risk of invasion and spread of Tuta absoluta in Africa,” Ecol. Complex., vol. 28, pp. 77–93, 2016.

[8] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015.

[9] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” pp. 1–14, 2015.

[10] K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition.”

[11] H. Peng, Y. Bayram, L. Shaltiel-Harpaz, F. Sohrabi, A. Saji, U. T. Esenali, and A. Jalilov, “Tuta absoluta continues to disperse in Asia: damage, ongoing management and future challenges,” Journal of Pest Science, pp. 1–11, 2018.